These are my thoughts so far on the pros and cons of each deployment mechanism for Azure resources. There are third-party tools out there that help with the development of ARM Templates, but Bicep is the open-source, Microsoft-recommended approach. I'm leaving Terraform out of the comparison since I haven't had much experience with it and the focus here is on Azure only, not other cloud providers. I am also leaving out the Azure SDK for this type of automation, as I feel it does not shorten the development loop: the code has to be compiled before it can be tested.

Ensure you have the latest Azure CLI or Azure Powershell installed and Bicep installed/upgraded:

az upgrade

az bicep install

or

az bicep upgrade

Scripts

Scripting resource creation imperatively through either Azure CLI or Azure Powershell.

Pros

- Easily testable locally, allowing for a quick inner loop when developing.

- Azure Powershell and Azure CLI commands are generally idempotent, and the wrapping script can be written to be so as well.

- Flexibility.

- Responses are easy to parse (from JSON to PsCustomObject), so data that other resources need is readily available.

- Every resource is created/managed within the script (unlike resource groups with ARM templates).

- You can create the resource group and KeyVault in the same script, depending on your requirements. Populating the actual secrets would probably be manual, with the KeyVault serving as the central persistent store.

Cons

- Possible lag time between creating a resource and being able to reference it, such as creating a service/webapp and then referencing its URI as the backend for a Front Door resource.

- Thought/effort needs to be spent on how to centralize common modules/logic for resource creation. For example, you may have common custom logic that would be accessed by the main script. This would probably best live in a separate git repository and be made available through Azure Artifacts or pulled down through Invoke-WebRequest/Curl (which requires your own manifest of the files you need and the hassle that comes with that).

- An upgrade to the Azure CLI may break existing functionality. Some resource management may not be available unless Azure CLI is updated.

- Some cmdlets or commands are not a 1-to-1 interface to the REST API. This is apparent with az monitor diagnostic-settings, where --logs and/or --metrics is expected to be JSON. Be ready to deal with some escaping issues. Notice that the hard-coded string requires doubled double quotes ("") and that there is whitespace after the "," and ":" characters:

$logs = '[{""category"": ""FrontdoorAccessLog"", ""enabled"": true, ""retentionPolicy"": {""enabled"": false, ""days"": 0 } } ]'
az monitor diagnostic-settings create --resource $cosmosId `
    -n $name --event-hub $dsEventhubNamespace.Name --event-hub-rule $authRules.Id `
    --logs $logs

Alternatively, if Powershell is used, you can have a more readable configuration by creating an object array which is then converted to JSON:

# $true/$false serialize to JSON booleans, matching the hard-coded string above.
$logs = @(
    @{
        category='FrontdoorAccessLog'
        enabled=$true
        retentionPolicy=@{
            days=0
            enabled=$false
        }
    }
)
# Escape the double quotes so they survive being passed to the Azure CLI.
$logs = (ConvertTo-Json -Compress -InputObject $logs).Replace('"', '\"')

- Depending on how the scripts are written, they could be brittle. Flexibility is a double-edged sword.

Neutral

- Each component can be unit tested through frameworks like Pester, leaving out the Azure dependency.

- End-to-End Testing can be easy or difficult depending on how your script is designed and how many resources you have to create or recreate.

- Only wrap around functionality that you require at the moment since the sheer number of parameters/options can be overwhelming. Stick to the defaults unless override/definition is needed.

az deployment group create -g MyResourceGroup -n MyDeploymentName --template-file arm_template.json --parameters @arm_template.parameters.json

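Note that the @ here is the Azure CLI convention for loading a value from a file, not a splat or spread operator. In Powershell an unquoted leading @ is parsed as the splatting operator, so quote the argument there: '@arm_template.parameters.json'.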

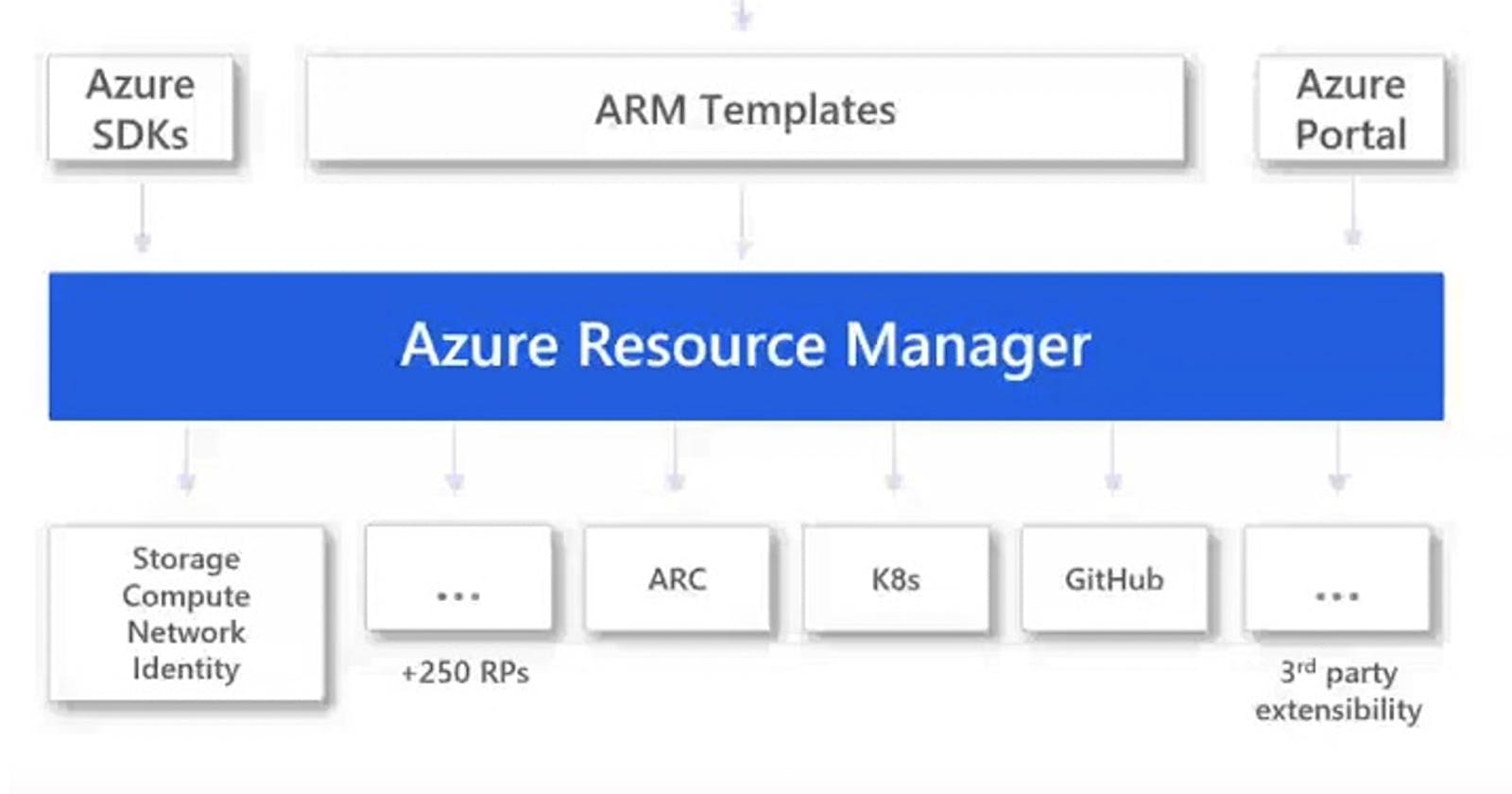

ARM Templates

The standard declarative process for resource deployment.

Pros

- Declarative

- Defines what the end state should be of the deployment.

- It should be easier than with scripts to tell which resources are created and how.

- Can modularize/abstract resource templates to their own file via linked templates. See Neutral section.

- Linked templates can be referenced via URI or relative path (as part of a Template Spec).

- Template Functions and User-defined Functions available.

- These have grown and become more reliable since initial release.

- The template can be validated through Azure CLI or Azure Powershell without actual deployment.

- Visual Studio and VS Code extensions to help with syntax and schema detection/enforcement.

- There are many ways to start. You can export existing resources as ARM Templates from the Azure Portal, CLI, or Powershell. The azure-quickstart-templates can also help.

Cons

- Templates that have a lot of resources that are nested are hard to read.

- Relative paths for linked templates are not currently supported locally.

- Output values that one resource needs from another after its creation are available, but the syntax to access them is verbose and sometimes unclear, so the documentation for that resource or StackOverflow must be searched.

- Schema and API versions determine what is supported, and it is sometimes unclear how to work out which one to use. Example: diagnostic settings for Monitoring.

- Schema differences between one resource and another for the same feature/functionality, such as NetworkRules, which requires mapping.

- Testing locally is only possible if you have nested templates. I've had no luck with the templateLink:Uri option, as it will either give me a Python error via Azure CLI or state that local linked files are not supported.

- You can use ARMLinker, but when I tested it with a simple template and a linked template with a relative path, it didn't generate a template with the nested linked template.

- The Template Functions will let you achieve the logic you want, but can be hard to read:

"service_plan_name": "[toLower(concat(variables('prefix'), 'plan', parameters('environment'), parameters('service_name')))]",

The equivalent in Bicep:

var service_plan_name = toLower('${prefix}plan${environment}${service_name}')

Neutral

- The resource group is expected to be created or specified upon deployment of the resources. You can create them explicitly through an ARM Template, but it is separate from your main resources.

- The secrets storage through KeyVault is expected to be populated separately. The KeyVault itself is created separately and likely beforehand since the secrets will be used in other resources.

- For secrets that haven't been generated yet like EventHub connection strings or CosmosDB keys, these would be populated after those resources are created through Outputs.

- There are limits on the number of parameters, variables, and output values, and on file size, if you choose not to use linked templates.

- There is no Switch function, which makes chained IF conditionals awkward.

- VS Code's Format Document (Shift+Alt+F) is your friend.

- It seems that Microsoft really wants you to store your ARM templates in one of the options below. This makes sense for consolidating common templates that differ only in their parameters. However, the second and third options are an additional hop that could be avoided if relative paths were supported in general (supposedly in the works, and currently only supported for the ARM Template Spec).

- A publicly accessible URI (Github).

- Azure Storage Account with SAS token.

- ARM Template Spec.

- This option is extra effort, but it is the recommended path forward. If you want to keep your templates in source control (and you should) and be able to deploy from them through an Azure DevOps pipeline, you'll need separate automation (a build pipeline) to update the Template Specs from what is checked into GitHub. This option provides the advantage of versioning and role-based access. Once templates are added to the spec, they can be referenced by resource ID and so shared between multiple main templates as well. I can understand the push for this option, but it may not be worth the effort if you don't mind your templates being publicly accessible (there shouldn't be hard-coded secrets in them).

You can chain IF functions like so:

"env": "[
    if(equals(variables('environment'), 'test8'), 'dev',
    if(equals(variables('environment'), 'test14'), 'test',
    if(equals(variables('environment'), 'test'), 'cert',
    if(equals(variables('environment'), 'load'), 'load',
    if(equals(variables('environment'), 'stage'), 'stage',
    if(equals(variables('environment'), 'prod'), 'prod', variables('environment')
    ))))))]",

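The same can supposedly be done with the following, but VS Code will warn you that the variable has already been defined (you may be able to ignore this in the settings for the Azure Resource Manager (ARM) Tools extension). Be careful with this form: each line falls back to variables('environment') rather than to the previous result, and JSON parsers generally keep only one value for a duplicated key, so verify the outcome before relying on it.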

"env": "[if(equals(variables('environment'), 'test8'), 'dev', variables('environment'))]",

"env": "[if(equals(variables('environment'), 'test14'), 'test', variables('environment'))]",

"env": "[if(equals(variables('environment'), 'test'), 'cert', variables('environment'))]",

"env": "[if(equals(variables('environment'), 'load'), 'load', variables('environment'))]",

"env": "[if(equals(variables('environment'), 'stage'), 'stage', variables('environment'))]",

"env": "[if(equals(variables('environment'), 'prod'), 'prod', variables('environment'))]",
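For comparison, the same mapping reads more easily in Bicep when written as an object lookup rather than nested conditionals. This is only a minimal sketch: the parameter name environment is an assumption that mirrors variables('environment') above, and resolvedEnv is a hypothetical output name added so the result can be inspected.

```bicep
// Assumed parameter; mirrors variables('environment') in the ARM example.
param environment string

// Lookup table from the incoming environment name to the value used for env.
var envMap = {
  test8: 'dev'
  test14: 'test'
  test: 'cert'
  load: 'load'
  stage: 'stage'
  prod: 'prod'
}

// Fall back to the original value when no mapping exists,
// like the innermost else branch of the chained if().
var env = contains(envMap, environment) ? envMap[environment] : environment

// Hypothetical output, only here to make the result visible after deployment.
output resolvedEnv string = env
```

Adding another environment then means adding one line to envMap instead of another level of nesting.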

Bicep

Bicep is a domain-specific language that I first heard about on the MSDev Show Podcast (play.acast.com/s/msdevshow/c61a21a8-4b05-40..) and which was officially released on 3-22-2021. The language is transpiled into JSON/ARM Templates, similar to how TypeScript is transpiled to JavaScript.

Pros

- Less verbose than JSON and easier to read.

- Ability to split into modules similar to linked templates with the advantage of referencing them locally with relative paths unlike ARM templates.

- The template can be validated through Azure CLI or Azure Powershell without actual deployment.

- Template Functions and User-defined Functions available.

- Many options to get started: from scratch with the Visual Studio and VS Code extensions, which help with syntax and schema detection/enforcement, or by exporting existing resources as ARM and transpiling them to Bicep files.

- Can transpile from .json to .bicep with the decompile command or from .bicep to .json with the build command:

az bicep decompile -f .\arm_template.json

az bicep build -f main.bicep
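The module support mentioned in the Pros above can be sketched roughly as follows. This assumes a file ./modules/appServicePlan.bicep sits next to main.bicep and declares a name parameter and an id output; that file, its parameter, and its output are all hypothetical names chosen for illustration.

```bicep
// main.bicep
param prefix string
param environment string
param service_name string

var service_plan_name = toLower('${prefix}plan${environment}${service_name}')

// The module is referenced by a path relative to this file, so it can be
// built and deployed from a local checkout without publishing it anywhere.
// Assumption: appServicePlan.bicep declares `param name string`.
module plan './modules/appServicePlan.bicep' = {
  name: 'appServicePlanDeploy'
  params: {
    name: service_plan_name
  }
}

// Module outputs are read with plain dot syntax.
// Assumption: appServicePlan.bicep declares `output id string`.
output planId string = plan.outputs.id
```

Compared with a linked ARM template, nothing has to be uploaded to a URI first, and reading an output is a property access rather than a reference() expression.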

Cons

- Output parameter names suffer from the same issue as ARM Templates, but the syntax is easier.

- If there is an error in your ARM Template you'll either get an error or warning when decompiling and even without errors, it's a best-effort attempt:

WARNING: Decompilation is a best-effort process, as there is no guaranteed mapping from ARM JSON to Bicep. You may need to fix warnings and errors in the generated bicep file(s), or decompilation may fail entirely if an accurate conversion is not possible. If you would like to report any issues or inaccurate conversions, please see https://github.com/Azure/bicep/issues.

Neutral

- The resource group is expected to be created or specified upon deployment of the resources.

- The secrets storage through KeyVault is expected to be populated separately. The KeyVault itself is created separately and likely beforehand since the secrets will be used in other resources.

- For secrets that haven't been generated yet like EventHub connection strings or CosmosDB keys, these would be populated after those resources are created through Outputs.

az deployment group create -g MyResourceGroup -n MyDeploymentName --template-file main.bicep --parameters @parameters.json

Summary

All three options can give you a reproducible Azure resource deployment that may be leveraged for multiple prod and non-prod environments. With each of these approaches, consider the ease of testing locally and how the deployment can be initiated conditionally through Azure DevOps via a Build or Release Pipeline.

Azure CosmosDB is a pain to create and remove when testing that logic, since it takes a while (a delete can take around 20-30 min with no data stored). The workaround is to create new resources with different names while the removal is still in progress in Azure. Note that this lengthy removal time applies to Azure in general, whichever mechanism you use (ARM/Bicep/Scripts).

With both ARM templates and Bicep templates, deployment failures can be investigated through the Azure Portal, CLI, Powershell, or a direct HTTP REST call. This is primarily what I use to determine errors in the deployment:

az deployment group create -g MyResourceGroup -n MyDeploymentName --template-file payments_token.json --parameters '@payments_token.parameters.json'

Look for a provisioningState property that indicates failure to trace down the cause.

Template Specs are not perfect, and if you choose to go this route to share common templates, there will be some gotchas. One example is creating two resources with different names in the main template:

The artifact path 'linkedTemplate.json' is not supported. An artifact path should be unique and not contain any expressions.

The workaround is to remove the duplicate resource and add it back in manually in the main template via the Portal.

When referencing KeyVault with SecretUri, the Secrets permissions can be just GET. When referencing KeyVault with the following:

@Microsoft.KeyVault(VaultName=myvault;SecretName=mysecret)

both GET and LIST permissions are required.

Calling this out since documentation doesn't really stress this. These two statements are equivalent:

"id": "[concat(resourceId('Microsoft.Network/virtualNetworks', variables('virtual_network_name')), '/subnets/', variables('subnet_name'))]",

"id": "[resourceId('Microsoft.Network/virtualNetworks/subnets', variables('virtual_network_name'), variables('subnet_name'))]",
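In Bicep, the same subnet ID can be obtained without building the string by hand, by declaring the virtual network and subnet as existing resources. This is a sketch under assumptions: the parameter names are hypothetical stand-ins for the variables above, and the API version shown is just one I believe is valid for Microsoft.Network, so swap in whichever version you target.

```bicep
// Hypothetical parameters standing in for variables('virtual_network_name')
// and variables('subnet_name') in the ARM expressions.
param virtualNetworkName string
param subnetName string

// Reference an already-deployed virtual network; `existing` means nothing is created.
resource vnet 'Microsoft.Network/virtualNetworks@2021-02-01' existing = {
  name: virtualNetworkName
}

// The subnet is a child resource of the virtual network.
resource subnet 'Microsoft.Network/virtualNetworks/subnets@2021-02-01' existing = {
  parent: vnet
  name: subnetName
}

// subnet.id should resolve to the same value as either ARM expression.
output subnetId string = subnet.id
```

The resourceId() function is also available in Bicep if you prefer to keep the second ARM form as-is.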

References

- feedback.azure.com/forums/281804-azure-reso..

- docs.microsoft.com/en-us/azure/azure-resour..

- docs.microsoft.com/en-us/azure/azure-resour..

- docs.microsoft.com/en-us/azure/azure-resour..

- docs.microsoft.com/en-us/azure/azure-resour..

- github.com/pester/Pester

- github.com/lars-erik/ARMLinker

- docs.microsoft.com/en-us/powershell/module/..

- docs.microsoft.com/en-us/cli/azure/group/de..

- github.com/Azure/azure-quickstart-templates

- docs.microsoft.com/en-us/azure/azure-resour..

- docs.microsoft.com/en-us/azure/azure-resour..

- docs.microsoft.com/en-us/azure/azure-resour..

- docs.microsoft.com/en-us/azure/azure-resour..

- github.com/Azure/template-specs/issues/49